Work on 2015-07-20

Tags: ceph

I spent the last few days learning about the openSUSE Ceph installation process. I ran into some issues, and I’m not done yet, so these are just my working notes for now. Once complete, I’ll write up the process on my regular blog.

Prerequisite: build a tool to build and destroy small clusters quickly

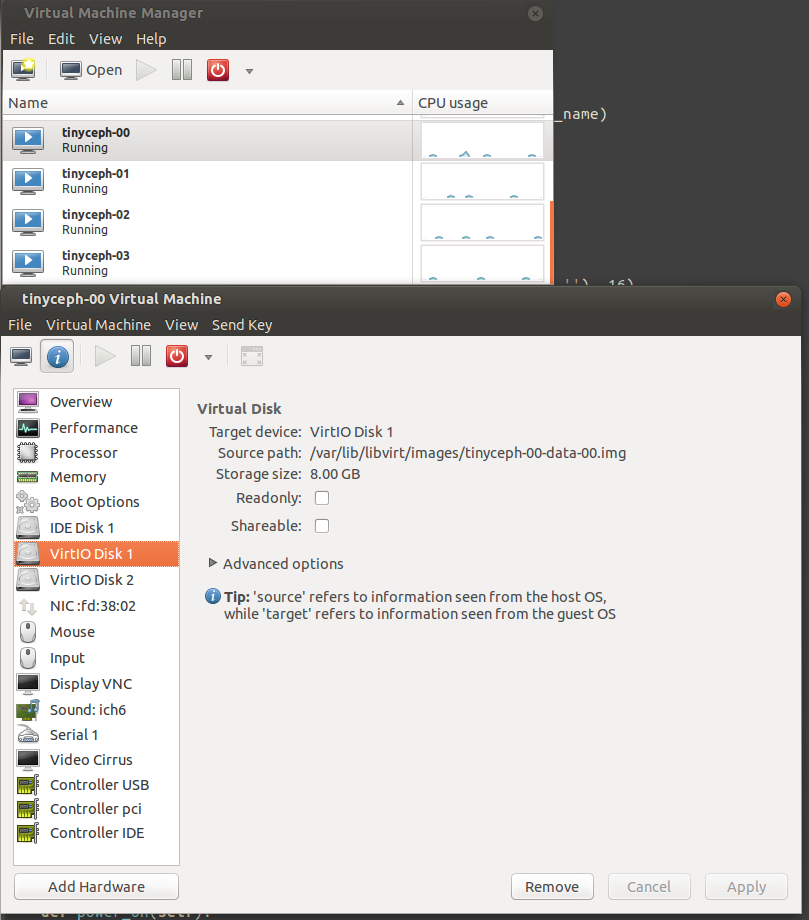

I needed a way to quickly provision and destroy virtual machines that were well suited to run small Ceph clusters. I mostly run libvirt / kvm in my home lab, and I didn’t find any solutions tailored to that platform, so I wrote ceph-libvirt-clusterer.

Ceph-libvirt-clusterer lets me clone a template virtual machine and attach as many OSD disks as I’d like in the process. I’m really happy with the tool considering that I only have a day’s worth of work in it, and I got to learn some details of the libvirt API and python bindings in the process.
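As a rough illustration of the "attach OSD disks" half of that idea, here is a minimal sketch that builds the libvirt `<disk>` XML for each extra drive. It is not the actual ceph-libvirt-clusterer code: the function names, the node names, the qcow2 format, and the image directory are all assumptions made for the example, and it only generates XML rather than talking to libvirt.

```python
import string
import xml.etree.ElementTree as ET


def osd_target_names(count, first_letter="b"):
    """Return virtio device names (vdb, vdc, ...), leaving vda for the OS disk."""
    start = string.ascii_lowercase.index(first_letter)
    letters = string.ascii_lowercase[start:start + count]
    if len(letters) < count:
        raise ValueError("too many disks for single-letter device names")
    return ["vd" + letter for letter in letters]


def osd_disk_xml(image_path, target_dev):
    """Build a libvirt <disk> element for one file-backed OSD disk."""
    disk = ET.Element("disk", type="file", device="disk")
    ET.SubElement(disk, "driver", name="qemu", type="qcow2")  # assumed format
    ET.SubElement(disk, "source", file=image_path)
    ET.SubElement(disk, "target", dev=target_dev, bus="virtio")
    return ET.tostring(disk, encoding="unicode")


def cluster_disk_plan(node_names, disks_per_node,
                      image_dir="/var/lib/libvirt/images"):  # assumed path
    """Map each node name to the list of <disk> XML snippets it needs."""
    plan = {}
    for node in node_names:
        plan[node] = [
            osd_disk_xml("{}/{}-{}.qcow2".format(image_dir, node, dev), dev)
            for dev in osd_target_names(disks_per_node)
        ]
    return plan


if __name__ == "__main__":
    # Hypothetical node names, two OSD disks each.
    for node, disks in cluster_disk_plan(["node-00", "node-01"], 2).items():
        for xml in disks:
            print(node, xml)
```

Each snippet could then be handed to the libvirt python bindings to attach the disk to a cloned domain; that step is left out here because it needs a running hypervisor.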

Build a template machine

I built a template machine with openSUSE’s tumbleweed and completed the following preliminary configurations:

- created ceph user

- ceph user has an SSH key

- ceph user’s public key is in the ceph user’s authorized_keys file

- ceph user is configured for passwordless sudo

- emacs is installed (not strictly necessary :-) )

Provision a cluster

I used ceph-libvirt-clusterer to create a four node cluster, and each node had two 8GB OSD drives attached.

Install Ceph with ceph-deploy

Once the machines were built, I followed the SUSE Enterprise Storage Documentation.

The ceph packages aren’t yet in the mainline repositories, so I added the OBS repository that carries them to the admin node:

$ sudo zypper ar -f http://download.opensuse.org/repositories/filesystems:/ceph/openSUSE_Tumbleweed/ ceph

$ sudo zypper update

Retrieving repository 'ceph' metadata ----------------------------------------------------------------------------------------------------------------------------------------------------------------------------[\]

New repository or package signing key received:

Repository: ceph

Key Name: filesystems OBS Project <filesystems@build.opensuse.org>

Key Fingerprint: B1FB5374 87204722 05FA6019 98C97FE7 324E6311

Key Created: Mon 12 May 2014 10:34:19 AM EDT

Key Expires: Wed 20 Jul 2016 10:34:19 AM EDT

Rpm Name: gpg-pubkey-324e6311-5370dbeb

Do you want to reject the key, trust temporarily, or trust always? [r/t/a/? shows all options] (r): a

Retrieving repository 'ceph' metadata .........................................................................................................................................................................[done]

Building repository 'ceph' cache ..............................................................................................................................................................................[done]

Loading repository data...

Reading installed packages...

And ceph packages were visible:

tim@linux-7d21:~> zypper search ceph

Loading repository data...

Reading installed packages...

S | Name               | Summary                                           | Type
--+--------------------+---------------------------------------------------+-----------
  | ceph               | User space components of the Ceph file system     | package
  | ceph               | User space components of the Ceph file system     | srcpackage
  | ceph-common        | Ceph Common                                       | package
  | ceph-deploy        | Admin and deploy tool for Ceph                    | package
  | ceph-deploy        | Admin and deploy tool for Ceph                    | srcpackage
  | ceph-devel-compat  | Compatibility package for Ceph headers            | package
  | ceph-fuse          | Ceph fuse-based client                            | package
  | ceph-libs-compat   | Meta package to include ceph libraries            | package
  | ceph-radosgw       | Rados REST gateway                                | package
  | ceph-test          | Ceph benchmarks and test tools                    | package
  | libcephfs1         | Ceph distributed file system client library       | package
  | libcephfs1-devel   | Ceph distributed file system headers              | package
  | python-ceph-compat | Compatibility package for Cephs python libraries  | package
  | python-cephfs      | Python libraries for Ceph distributed file system | package

First issue: python was missing on the other nodes

When I installed ceph-deploy on the admin node, python was also installed. The other nodes were still running with a bare minimum configuration from the tumbleweed install, so python was missing, and ceph-deploy’s install step failed.

I installed Ansible to correct the problem on all nodes simultaneously, but Ansible requires python on the remote side, too. That meant I had to manually install python on the remaining three nodes just like sysadmins had to do years ago.

Second issue: all nodes need the OBS repository

I didn’t add the OBS repository to the remaining three nodes because I wanted to see if ceph-deploy would add it automatically. I didn’t expect that to be the case, but since this version of ceph-deploy came directly from SUSE, there was a chance. It didn’t, so I had to add the repository to every node myself.

Fortunately, with python now installed everywhere, Ansible works:

ceph@linux-7d21:~/tinyceph> ansible -i ansible-inventory all -a "sudo zypper ar -f http://download.opensuse.org/repositories/filesystems:/ceph/openSUSE_Tumbleweed/ ceph"

192.168.122.122 | success | rc=0 >>

Adding repository 'ceph' [......done]

Repository 'ceph' successfully added

Enabled : Yes

Autorefresh : Yes

GPG Check : Yes

URI : http://download.opensuse.org/repositories/filesystems:/ceph/openSUSE_Tumbleweed/

# and three more nodes worth of output...

ceph@linux-7d21:~/tinyceph> ansible -i ansible-inventory all -a "sudo zypper --gpg-auto-import-keys update"

Once both of these commands completed, ceph-deploy install worked as expected.

Third issue: I was using IP addresses

ceph-deploy new complains when provided with IP addresses:

ceph@linux-7d21:~/tinyceph> ceph-deploy new 192.168.122.121 192.168.122.122 192.168.122.123 192.168.122.124

usage: ceph-deploy new [-h] [--no-ssh-copykey] [--fsid FSID]

[--cluster-network CLUSTER_NETWORK]

[--public-network PUBLIC_NETWORK]

MON [MON ...]

ceph-deploy new: error: 192.168.122.121 must be a hostname not an IP
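For illustration, a check like this is easy to reproduce with Python's standard `ipaddress` module. The sketch below is not ceph-deploy's actual implementation, and the function names are made up; it only shows one way to reject IP literals while letting hostnames through.

```python
import ipaddress


def is_ip_address(value):
    """Return True if value parses as an IPv4 or IPv6 literal."""
    try:
        ipaddress.ip_address(value)
    except ValueError:
        return False
    return True


def require_hostnames(monitors):
    """Raise ValueError on the first monitor given as an IP address."""
    for mon in monitors:
        if is_ip_address(mon):
            raise ValueError("{} must be a hostname not an IP".format(mon))
    return list(monitors)


if __name__ == "__main__":
    print(require_hostnames(["tinyceph-00", "tinyceph-01"]))
    try:
        require_hostnames(["192.168.122.121"])
    except ValueError as err:
        print("error:", err)
```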

In the future, it’d be pretty cool if ceph-libvirt-clusterer supported updating DNS records so I didn’t need to resort to the hosts-file Ansible playbook that I used today:

---
- hosts: all
  sudo: yes
  tasks:
    - name: add tinyceph-00
      lineinfile: dest=/etc/hosts line='192.168.122.121 tinyceph-00'
    - name: add tinyceph-01
      lineinfile: dest=/etc/hosts line='192.168.122.122 tinyceph-01'
    - name: add tinyceph-02
      lineinfile: dest=/etc/hosts line='192.168.122.123 tinyceph-02'
    - name: add tinyceph-03
      lineinfile: dest=/etc/hosts line='192.168.122.124 tinyceph-03'

- hosts: 192.168.122.121
  sudo: yes
  tasks:
    - name: update hostname
      lineinfile: dest=/etc/hostname line='tinyceph-00' state=present regexp=linux-7d21

- hosts: 192.168.122.122
  sudo: yes
  tasks:
    - name: update hostname
      lineinfile: dest=/etc/hostname line='tinyceph-01' state=present regexp=linux-7d21

- hosts: 192.168.122.123
  sudo: yes
  tasks:
    - name: update hostname
      lineinfile: dest=/etc/hostname line='tinyceph-02' state=present regexp=linux-7d21

- hosts: 192.168.122.124
  sudo: yes
  tasks:
    - name: update hostname
      lineinfile: dest=/etc/hostname line='tinyceph-03' state=present regexp=linux-7d21

Fourth issue: tumbleweed uses systemd, but ceph-deploy doesn’t expect that

[ceph_deploy.mon][INFO ] distro info: openSUSE 20150714 x86_64

[tinyceph-03][DEBUG ] determining if provided host has same hostname in remote

[tinyceph-03][DEBUG ] get remote short hostname

[tinyceph-03][DEBUG ] deploying mon to tinyceph-03

[tinyceph-03][DEBUG ] get remote short hostname

[tinyceph-03][DEBUG ] remote hostname: tinyceph-03

[tinyceph-03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[tinyceph-03][DEBUG ] create the mon path if it does not exist

[tinyceph-03][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-tinyceph-03/done

[tinyceph-03][DEBUG ] create a done file to avoid re-doing the mon deployment

[tinyceph-03][DEBUG ] create the init path if it does not exist

[tinyceph-03][INFO ] Running command: sudo /etc/init.d/ceph -c /etc/ceph/ceph.conf start mon.tinyceph-03

[tinyceph-03][ERROR ] Traceback (most recent call last):

[tinyceph-03][ERROR ] File "/usr/lib/python2.7/site-packages/remoto/process.py", line 94, in run

[tinyceph-03][ERROR ] reporting(conn, result, timeout)

[tinyceph-03][ERROR ] File "/usr/lib/python2.7/site-packages/remoto/log.py", line 13, in reporting

[tinyceph-03][ERROR ] received = result.receive(timeout)

[tinyceph-03][ERROR ] File "/usr/lib/python2.7/site-packages/execnet/gateway_base.py", line 701, in receive

[tinyceph-03][ERROR ] raise self._getremoteerror() or EOFError()

[tinyceph-03][ERROR ] RemoteError: Traceback (most recent call last):

[tinyceph-03][ERROR ] File "<string>", line 1033, in executetask

[tinyceph-03][ERROR ] File "<remote exec>", line 12, in _remote_run

[tinyceph-03][ERROR ] File "/usr/lib64/python2.7/subprocess.py", line 710, in __init__

[tinyceph-03][ERROR ] errread, errwrite)

[tinyceph-03][ERROR ] File "/usr/lib64/python2.7/subprocess.py", line 1335, in _execute_child

[tinyceph-03][ERROR ] raise child_exception

[tinyceph-03][ERROR ] OSError: [Errno 2] No such file or directory

[tinyceph-03][ERROR ]

[tinyceph-03][ERROR ]

[ceph_deploy.mon][ERROR ] Failed to execute command: /etc/init.d/ceph -c /etc/ceph/ceph.conf start mon.tinyceph-03

[ceph_deploy][ERROR ] GenericError: Failed to create 4 monitors

Sure enough, a little manual inspection revealed that there is no file at /etc/init.d/ceph, and that ceph is integrated with systemd instead:

ceph@tinyceph-00:~/tinyceph> ls -la /etc/init.d/ceph

ls: cannot access /etc/init.d/ceph: No such file or directory

ceph@tinyceph-00:~/tinyceph> sudo service ceph status

* ceph.target - ceph target allowing to start/stop all ceph*@.service instances at once

Loaded: loaded (/usr/lib/systemd/system/ceph.target; disabled; vendor preset: disabled)

Active: inactive (dead)

Jul 19 23:50:35 tinyceph-00 systemd[1]: Reached target ceph target allowing to start/stop all ceph*@.service instances at once.

Jul 19 23:50:35 tinyceph-00 systemd[1]: Starting ceph target allowing to start/stop all ceph*@.service instances at once.

Jul 19 23:50:47 tinyceph-00 systemd[1]: Stopped target ceph target allowing to start/stop all ceph*@.service instances at once.

Jul 19 23:50:47 tinyceph-00 systemd[1]: Stopping ceph target allowing to start/stop all ceph*@.service instances at once.

ceph@tinyceph-00:~/tinyceph> sudo service ceph start

ceph@tinyceph-00:~/tinyceph> sudo service ceph status

* ceph.target - ceph target allowing to start/stop all ceph*@.service instances at once

Loaded: loaded (/usr/lib/systemd/system/ceph.target; disabled; vendor preset: disabled)

Active: active since Mon 2015-07-20 00:24:01 EDT; 4s ago

Jul 20 00:24:01 tinyceph-00 systemd[1]: Reached target ceph target allowing to start/stop all ceph*@.service instances at once.

Jul 20 00:24:01 tinyceph-00 systemd[1]: Starting ceph target allowing to start/stop all ceph*@.service instances at once.

I learned that this is a known bug, and I’ll try all of this again with an older version of openSUSE.
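To make the failure mode concrete, here is a minimal sketch of the kind of check that would avoid it: look for the sysvinit script first, and fall back to systemd when it is missing. This is not how ceph-deploy handles it or how the bug gets fixed upstream. The function name is hypothetical, starting `ceph.target` is only a guess based on the unit shown in the output above, and `/run/systemd/system` is the usual marker for a host booted with systemd.

```python
import os


def mon_start_command(hostname,
                      init_script="/etc/init.d/ceph",
                      systemd_dir="/run/systemd/system",
                      exists=os.path.exists):
    """Pick a command to start the monitor based on the init system found."""
    if exists(init_script):
        # sysvinit: the same command ceph-deploy tried to run above
        return [init_script, "-c", "/etc/ceph/ceph.conf",
                "start", "mon.{}".format(hostname)]
    if exists(systemd_dir):
        # systemd: assumption, start the target seen in the status output
        return ["systemctl", "start", "ceph.target"]
    raise RuntimeError("no known init system found on this host")


if __name__ == "__main__":
    try:
        print(mon_start_command("tinyceph-03"))
    except RuntimeError as err:
        print("error:", err)
```

The `exists` parameter is only there so the choice can be exercised without a real sysvinit or systemd host.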

… and that’s where I’m calling it a night. I’ll be back at it this week.